Orchestration & Virtual Machines

Run Virtual Machines & Kubernetes in concert with GPUs and significantly boost the performance of resource-intensive applications and workloads.

The most flexible, affordable & user-friendly AI-optimized GPU platform, pre-plumbed for containers, virtual machines and bare metal.

High bandwidth, data security, control, low latency, and unmetered GPU usage are just a few of the advantages that make a GPU cluster on a private cloud a powerful, scalable solution for performance-hungry workloads.

CDS offers high-availability GPU services, providing businesses with cutting-edge computing power for demanding tasks.

CDS also provides volume discounts and custom pricing for enterprises with large-scale GPU requirements.

We offer scalable GPU services designed to meet the evolving demands of businesses, allowing GPU resources to scale seamlessly as workloads increase.

CDS offers a comprehensive range of GPU services designed to accelerate high-performance computing tasks such as AI, machine learning, data processing, and simulations.

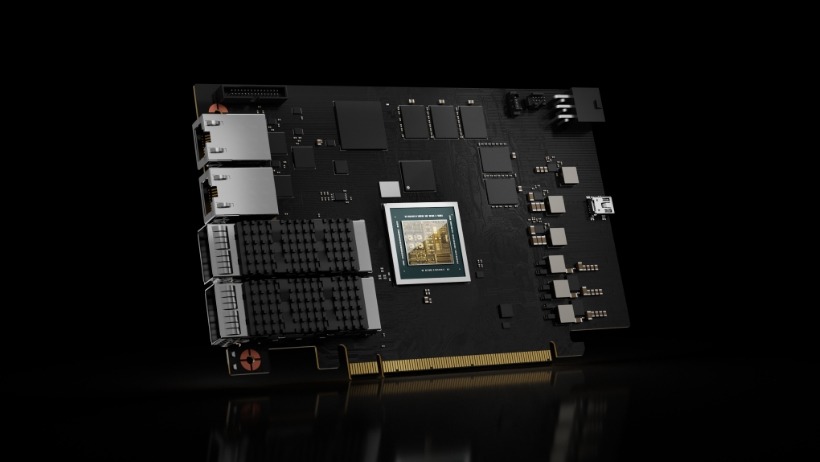

CDS is excited to introduce NVIDIA Blackwell, NVIDIA's latest GPU architecture, designed for AI, deep learning, and high-performance computing. Blackwell GPUs deliver greater speed and efficiency for complex computations, making them well suited to data-intensive tasks.

The “Blackwell” B100 is the next generation of AI GPU performance, offering nearly 80% more computational throughput than the previous-generation “Hopper” H100, along with faster HBM3e memory and flexibly scaling storage.

The B200 platform is an x86 system built on an eight-GPU Blackwell baseboard, delivering 144 petaFLOPs and 192GB of HBM3e memory per GPU. Designed for HPC use cases, B200 GPUs offer best-in-class infrastructure for high-precision AI workloads.

The “Grace Blackwell” GB200 supercluster promises up to 30x the LLM inference performance of the H100. Built on the largest NVLink interconnect of its kind, it reduces cost and energy consumption by up to 25x compared with the H100 generation.

Select from the latest generation of high-end NVIDIA GPUs designed for AI workloads. Our team can advise you on the ideal GPU, network, and storage configuration for your use case.

The NVIDIA H100 SXM is available as on-demand and reserved instances, offering up to 80GB of HBM3 memory and up to 3.35TB/s of memory bandwidth for demanding workloads.

Available Q3 by Request

The NVIDIA GH200 Grace Hopper™ is available on request with up to 144GB of HBM3e and up to 4.9TB/s of memory bandwidth, about 1.5x the bandwidth of the H100.

With NVIDIA’s powerful HGX and DGX SuperPOD architectures, CDS enables enterprises to harness massive computational power for AI training, deep learning, and large-scale data analytics.

CDS is transforming its infrastructure to become AI-centric, integrating advanced artificial intelligence capabilities across its operations. By moving to an AI-driven infrastructure, CDS aims to enhance data processing, automate decision-making, and improve operational efficiency.

CDS offers a broad range of GPU resources designed to meet diverse computing needs, from AI and machine learning to complex data analytics and high-performance simulations.

CDS offers cost-effective AI computing solutions designed to meet the demands of modern businesses.

CDS is purpose-built for AI use cases, offering tailored solutions designed to accelerate the adoption and performance of artificial intelligence technologies.

CDS offers “One Platform,” a versatile solution that provides a range of compute flavors tailored to meet diverse business needs.

CDS specializes in building AI solutions efficiently, leveraging cutting-edge technology and streamlined processes to deliver robust and scalable systems.